In Defense of Progress

A familiar pattern is recurring. We figure out some new technology with great potential. Then we sabotage ourselves because we over-index on problems we can see it causing in the future. History has multiple examples of people making incredible discoveries only for things to end terribly for them. Galileo and Ignaz Semmelweis come to mind.

Atomic Blues

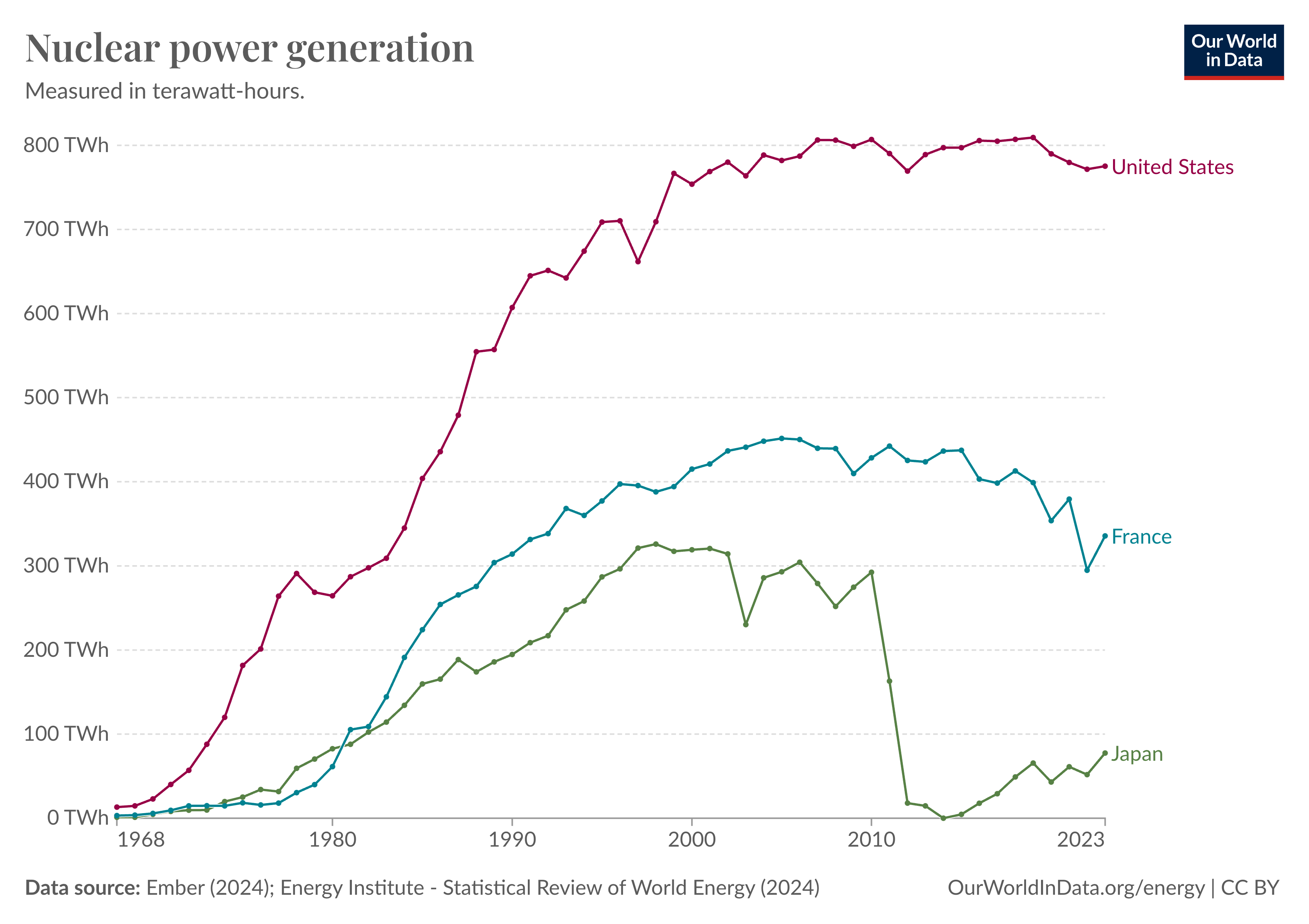

By the 1990s, nuclear energy development in the U.S. had ground to a halt due to environmental concerns. The world is worse off for it, and significant delays to progress[1] can likely be attributed to this pause. Until recently, it also pushed us toward zero-sum thinking about growing the energy supply with hydrocarbons.

New energy is bad for the environment. But new energy is required to grow the economy. Thus, economic growth is bad for the environment. Checkmate.[2]

There are similar examples in the Pessimists Archive: photography, novels, bicycles, and (of course) vaccines.

Smartphones and Social Media

Growing up in a communal setting, you get compared to other kids. You don't even want to be the gold-standard kid, because then you're not allowed to make mistakes.

What happens when the internet allows parents to continuously brag about their kids in WhatsApp groups? How about in a curated feed on Instagram? What if the algorithm rewards your parents for sharing the chaos (you) of running a family?[3]

This is only one of the many ills that came about with social media. But what happens if we don't allow the good things to manifest?

Civilization is an exercise in complex coordination. Aqueducts meant a city no longer had to be sited right next to a river or stream. The U.S. interstate highway system gave us drive-thru fast food, suburbs, shopping malls, and more. GPS and smartphones unlocked map-based applications like food delivery and ride sharing. The internet has revolutionized commerce, entertainment, education, and far more than I can list here.

My mother, in her 80s, has an important errand she's been trying to do but after borrowing a car to drive to the place where she can do it, she had to turn around & go back because the parking meters there wouldn't accept coins & required an app she couldn't figure out.

— Dana Stevens (is on Bl\*\*sky now under same handle) (@thehighsign) May 15, 2023

And things will only get more complex...

AI and the Internet

The current scapegoats are LLMs, yet they are the perfect tool for sifting through the deluge of information that bombards us daily. Past a certain scale, organizations use machine learning to personalize outcomes.[4] Fintech companies, likewise, grow into using ML for fraud prevention and detection. It's hard to navigate a space if you didn't put the stuff there yourself. You can signal your intent by choosing who you connect with, but surfacing relevant information still requires knowing what is already there, and time of creation is not a good way to find it.

Yet every so often, a new open letter or legislative bill appears with the aim of pausing development or restricting the release of model weights.[5]

Social media ebbs and flows: memetic tides and currents determine the current thing, and then everyone hurries to chime in. Taking part in the internet without an AI assistant is like being in a sailboat on the ocean with no training. You might figure it out, but most people will capsize and end up huddling together to stay alive. AI gives everyone a motor. Training is still useful, but you don't necessarily need it to set a direction and make your way there. The winds and currents will push you off your charted path, but you can always course-correct because you have a motor whose speed you control.

Maps are a great analogy. We don't organize geography by the most recently added information; we layer abstractions.[6][7] Maps improve over time. We know this because they become more useful, not because we see the work that makes them more useful. LLM-powered applications will help us find the important needles in our ever-expanding haystack.

“The future of modern society and the stability of its inner life depend in large part on the maintenance of an equilibrium between the strength of the techniques of communication and the capacity of the individual’s own reaction.” Pope Pius XII[8]

LLMs are trained on internet-scale data, so LLM-powered experiences will only become better suited to doing things on the internet.

Complexity is increasing. Savvy people will embrace it sooner than most, which will raise the complexity for everyone else. We need to build tools that help people engage with a world that will keep getting more complicated.

In Closing

I previously wrote about how driving involves many decisions that interact with one another. A lot of those decisions are made passively (muscle memory on familiar routes). Just as writing freed up personal memory, AI advancements will free up decision-making so we can focus on the decisions we consider most important in our daily lives.[9]

"Consider writing, perhaps the first information technology: The ability to capture a symbolic representation of spoken language for long-term storage freed information from the limits of individual memory. Today this technology is ubiquitous in industrialized countries. Not only do books, magazines and newspapers convey written information, but so do street signs, billboards, shop signs and even graffiti. Candy wrappers are covered in writing. The constant background presence of these products of 'literacy technology' does not require active attention, but the information to be conveyed is ready for use at a glance. It is difficult to imagine modern life otherwise." Mark Weiser[10]

This problem has been around for a long time, and it is getting worse. Why are we scaremongering[11] about the fix?

We've been down this road before.[12] Perhaps we can do better this time.

...

Thanks to Surakat Kudehinbu for reading the draft of this.

Footnotes

- Power generation has grown tremendously since the Industrial Revolution, but we still need to grow by about three orders of magnitude to reach Type I on the Kardashev scale.

- I'm talking about degrowth.

- Here's a release from Meta about algorithmic feeds.

- This New Yorker piece captures some of their thinking.

- A really interesting write-up of how 500 18th-century priests perceived their geographies, and the maps that tell the tale.

- A talk about the maps we still have to make.

- This quote appears in Understanding Media: The Extensions of Man by Marshall McLuhan.

- A counterargument I often hear to freeing up decision-making time is: "Life isn't meant to be free of decisions. Doing mundane things is part of life." I agree. What technology does is let us choose which decisions we want to make and which mundane decisions we are happy to keep making. When I walk to work, I want Google Maps or Waze to tell me the optimal path. When I take a leisurely walk in the same city, I want to make every turn myself, because that increases my chances of encountering something interesting I wouldn't notice when walking the same path for a different purpose. I think this gets at a larger point about how we use technology. For example, social media makes it apparent that a lot of people online have the wrong politics. But people have always had differing opinions; those opinions just never had global reach. We can choose between finding and engaging with the people we agree with and arguing with the people who are wrong. Algorithms tuned for engagement surface the wrongthink to us because it gets us to create more content in response. Again, we can simply choose to ignore it.

- This quote captures an underappreciated phenomenon: technology eventually blends into the background and we stop seeing it. Magic. It is from The Computer for the 21st Century (1991).

- LLMs are relatively harmless because they're prediction engines that rely on patterns in their training data. They are impressive, but they can't perform what you would consider meaningful feats of reasoning without guidance and additional scaffolding (like memory and code execution sandboxes). They're definitely not sentient. At least for now… I reckon the acceleration and consolidation we see among big AI labs is likely due to this realization. They are taking their foot off the brake (trimming their safety organizations) and trying to compete with big tech on product now that the biggest models are on par with their open-source competitors. Time will tell if this is a wise approach.

- Some reading on Project Independence, a 1970s proposal (never realized) to build 1,000 nuclear plants by 2000. And a tweet about France bucking the trend and continuing to grow its energy production with nuclear without hurting the planet.